about 1 month ago by Dak

I Love Tech

You must use AI

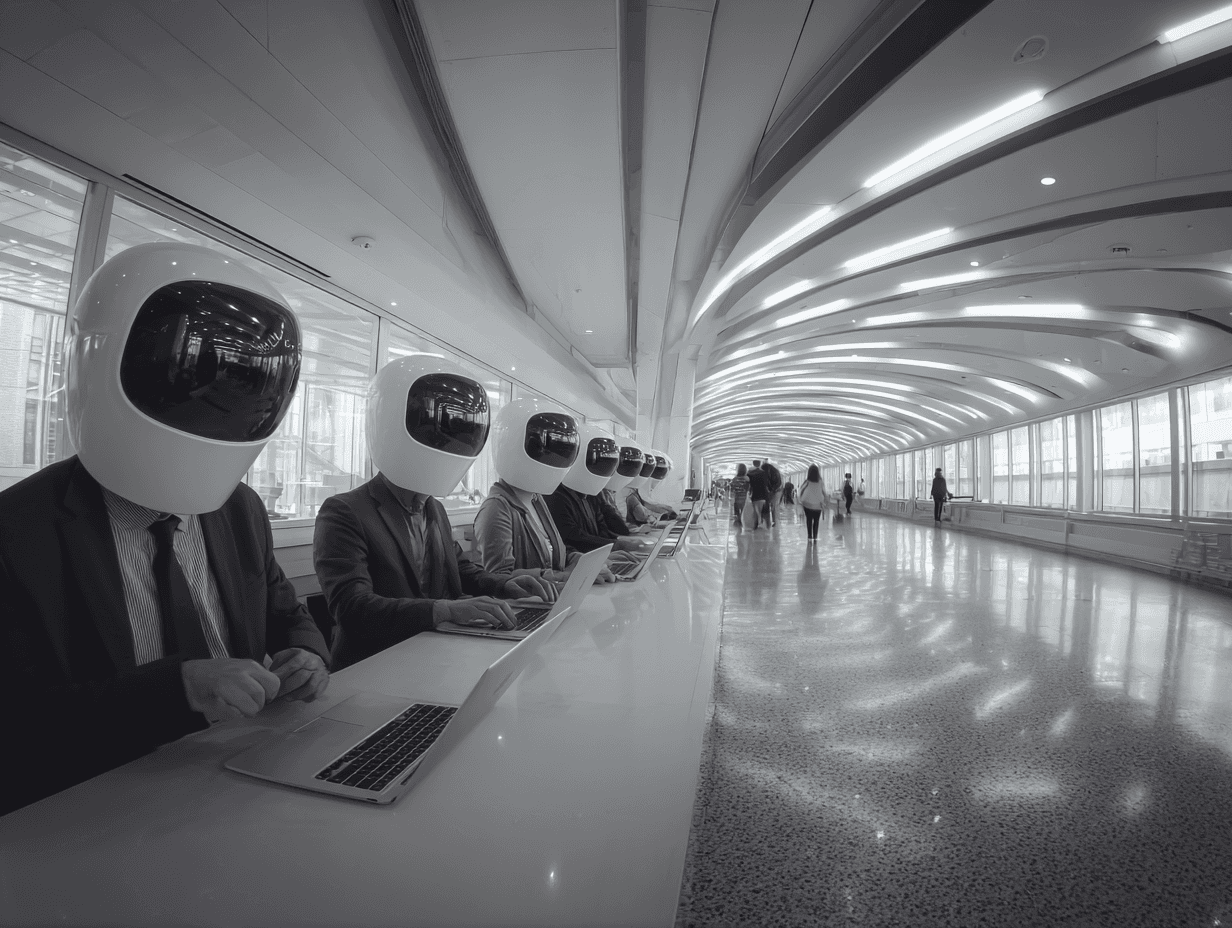

Measuring Prompts Instead of Progress Is Madness

Some tech companies have begun mandating the use of AI in employee workflows. Not just mandating, but measuring. Counting tokens, tracking prompts, tallying up whether you have used AI today or not. It is the kind of measurement that feels alien to how we have historically thought about tools at work. We have never tracked how many times someone picked up a calculator, consulted Stack Overflow, or grabbed a whiteboard. We have cared about output, not input keystrokes.

Microsoft executives have even said that “AI is now a fundamental part of how we work… it is no longer optional” (Unleash). Reports note that Microsoft is considering AI usage as part of performance reviews (Forbes). Shopify’s Tobi Lütke called AI a “fundamental expectation” (MIT CDO), and Coinbase has gone as far as firing programmers who refused to adopt AI in their workflow (Forklog).

I have had wins and losses with AI. On the good days, it has helped me navigate sprawling codebases, search for specifics that would otherwise demand a dozen Google searches, and produce examples that illuminate the path forward faster than any meeting ever could. On the bad days, it slows me down. I can feel the drag of poor context, or a suggestion that leads nowhere useful. That is the reality of a tool still finding its best fit.

And that is exactly why the obsession with mandating and measuring feels off. AI’s effectiveness is nuanced. Some problems lend themselves to LLMs, others do not. Some people thrive with these tools, others bristle. Instead of enforcing usage quotas, we should be standardizing the environments these tools operate in: clear documentation, context-rich repos, shared strategies. Think of llms.txt, AGENTS.md, or the way Cursor, Claude Code, and similar tools digest a codebase. If organizations want real productivity gains, the focus should be on feeding tools the right diet of context and ensuring knowledge is well documented. That is something we should have been doing for humans all along.
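If you wanted to measure something, the state of that context is a more useful target than prompt counts. Here is a minimal sketch of what that could look like, assuming a team keeps its context files at the repo root. The file list and the `missing_context_files` helper are my own illustrative choices, not a standard.

```python
from pathlib import Path

# Hypothetical set of context files; swap in whatever your team standardizes on.
CONTEXT_FILES = ("README.md", "AGENTS.md", "llms.txt")


def missing_context_files(repo_root, expected=CONTEXT_FILES):
    """Return the expected context files that are absent from the repo root."""
    root = Path(repo_root)
    # Preserve the order of `expected` so reports are stable between runs.
    return [name for name in expected if not (root / name).is_file()]
```

Calling `missing_context_files(".")` from a checkout lists whatever is absent. It only checks that the files exist, not whether they are any good, so treat it as a nudge rather than a score.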

There is also something underappreciated about joy. When a tool excites you, when it clicks with your brain, you get a productivity boost simply because you are enjoying the work more. Even if another tool might be objectively faster, if you hate it, you will slow down. Standardization makes sense when collaboration demands it, such as Linear over Jira, Notion over Confluence, or Slack over email. But enforcing AI usage does not sit in that same category. It risks ignoring the human side of productivity: people do their best work with tools they like, not with tools they are forced to log prompts in to meet a quota.

In the age of AI, we cannot lose sight of what actually makes humans perform better. If we want AI to assist with that, the focus should be on how we design AI to help, not how we force humans to use AI. That is the nuance. The real work is in making our shared tools better. How do we keep Linear or Jira more organized so everyone benefits? If AI is the collaborative tool, then we should be asking how to improve it at the organizational level. A better tool for the organization becomes a better tool for every member of the organization, without mandates. When Linear is tidy, everyone wins. When it is a mess, nobody does.

Recommending a tool that solves a problem is one thing. Forcing it is another. If a new workflow really is easier and more productive, people will choose it on their own. That is what humans do. Getting something done is always more fun than being stuck. But productivity has thresholds. Saving three hours might not feel all that different from saving four. Cutting a week down to six days might not matter either. At some point, the difference blends. What matters is whether people actually enjoy the tool. Everyone loves a tool that takes a five-month slog and turns it into one second. That is a no-brainer. But in the smaller margins, preference and joy carry more weight.

Still, companies are rolling out monitoring systems to enforce usage. Tracking tools now measure prompt frequency, workflow adoption, and departmental uptake (Teramind, Worklytics). Surveys show adoption rates are being baked into performance metrics (Business Insider). Some organizations even tie headcount requests to AI efficiency: Shopify now requires managers to prove why AI cannot do the work before hiring (CNBC).

The risk is obvious. If AI mandates become another checkbox, another metric to optimize, then we will miss the real benefit of these tools. AI should make humans better, not force humans into proving their worth through token counts.

Further Reading

- Microsoft: AI is no longer optional

- Forbes on Microsoft performance reviews

- Shopify CEO statement

- Shopify headcount rules

- Tobi Lütke on X

- Coinbase employee terminations

- Coinbase AI usage stats

- Duolingo layoffs

- Duolingo CEO clarifies AI strategy

- Duolingo productivity claims

- Zapier mandate

- Washington Post overview

- Business Insider survey

- Teramind on AI tracking

- Worklytics on AI adoption metrics
